4 EECS faculty and 3 alumni to participate in Fields Institute symposium celebrating work of Stephen Cook

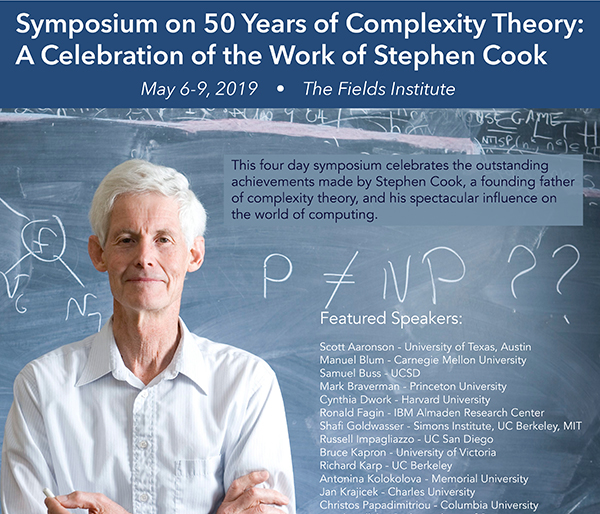

CS Prof. Shafi Goldwasser, CS Profs. Emeriti Richard Karp, Manuel Blum, and Christos Papadimitriou, and alumni Michael Sipser (2016 CS Distinguished Alumnus, PhD ’80, advisor: Manuel Blum), Scott Aaronson (CS PhD ’04, advisor: Umesh Vazirani), and James Cook (CS PhD ’14, advisor: Satish Rao) will all speak at the Fields Institute for Research in Mathematical Sciences symposium "50 Years of Complexity Theory: A Celebration of the Work of Stephen Cook." The symposium, to be held May 6-9, 2019, in Toronto, Canada, celebrates 50 years of NP-completeness, along with Stephen Cook's outstanding achievements and his remarkable influence on the field of computing.